A vision sensor is a complete module having optics, a Centeye vision chip, a processor, and firmware, all designed holistically to accomplish a particular machine vision task. Below is a sampling of vision sensors we have fabricated over the past 15 years.

1999: Pre-Centeye at NRL

As an employee at the Naval Research Laboratory, Centeye’s founder Geoffrey L. Barrows fabricated the first-ever optical flow sensor to be flown on an aircraft. Notice the point-to-point wiring and trimmer potentiometers for bias adjustment. Details of the construction of this sensor may be found in Barrows’ Ph.D. dissertation as well as in US Patents 6,020,953 and 6,384,905. Mass: About 27 grams. Vision chip: unnamed chip with 36 pixels and analog edge enhancement. Processor: PIC 16C7xx chip running at 1 MIPS. Function: Cause the glider to turn away from walls when it approaches them too closely.

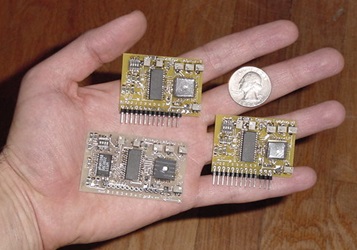

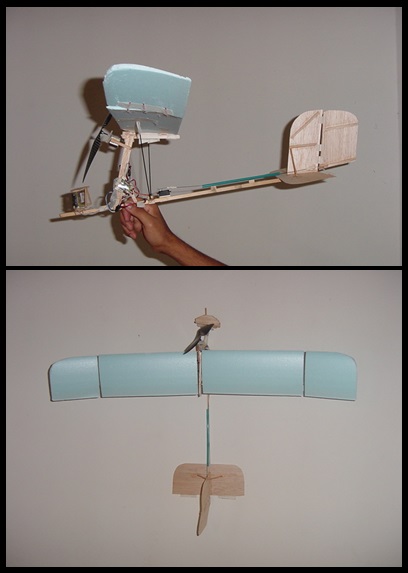

2001: Pre-Ladybug sensors

Under support from DARPA, Centeye fabricated several optical flow sensors and flew them on a foam fixed-wing aircraft to demonstrate altitude hold using optical flow. These sensors used a “pre-Ladybug” vision chip incorporating analog edge enhancement and detection, and a PIC 18F-series microcontroller. The sensitive vision chip circuitry allowed the measurement of optical flow even when the texture comprised a moving blank sheet of paper.

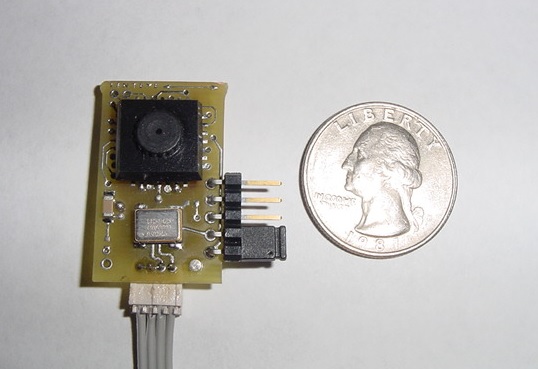

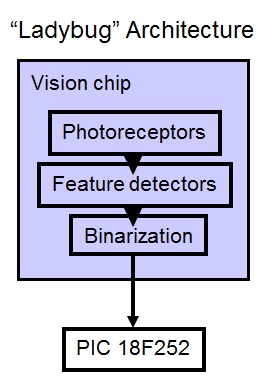

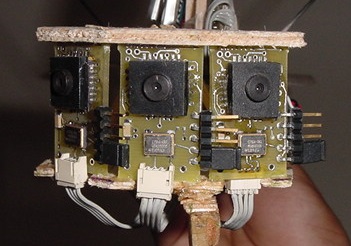
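As a rough sketch of the principle behind optical-flow altitude hold (not a description of the firmware flown on these sensors): for a downward-looking sensor moving at ground speed `v` at height `h` over flat terrain, the optical flow directly below is approximately `v / h` radians per second. The function names, the proportional controller, and the gain below are hypothetical and chosen only for illustration.

```python
def height_from_flow(ground_speed_mps, flow_rad_per_s):
    """Estimate height above ground from downward-looking optical flow.

    Assumes level flight over flat ground with no rotation,
    so that flow = ground_speed / height.
    """
    if flow_rad_per_s <= 0:
        raise ValueError("optical flow must be positive")
    return ground_speed_mps / flow_rad_per_s


def altitude_hold_command(flow_rad_per_s, target_flow_rad_per_s, gain=0.5):
    """Hypothetical proportional climb command (positive means climb).

    At constant ground speed, flow above the target means the
    aircraft is lower than intended, so the command is positive.
    """
    return gain * (flow_rad_per_s - target_flow_rad_per_s)


# Example: 10 m/s ground speed and 2 rad/s of measured flow
print(height_from_flow(10.0, 2.0))
print(altitude_hold_command(2.5, 2.0))
```

Holding the measured flow at a fixed target, rather than computing height explicitly, is one simple design choice: it needs no separate speed measurement as long as ground speed stays roughly constant.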

2002-2003: Ladybug and obstacle avoidance

Our first Ladybug sensors were prototyped in 2002 and improved in 2003. The vision chip was designed in late 2001 and included four rows of 22 pixels (88 total) with analog edge detector circuitry. The circuitry was sensitive enough to allow altitude hold over snow on a cloudy day. We finally achieved repeatable obstacle avoidance in August 2003 by improving the optics and designing an extremely agile fixed-wing aircraft. The Ladybug sensor was our most popular and well-known sensor in the early 2000s.

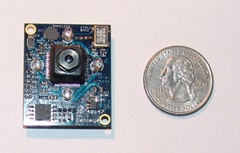

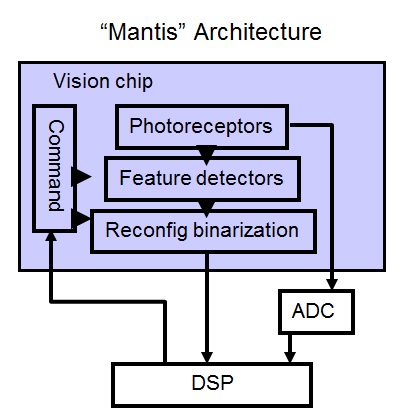

2005: Mantis sensor

The Mantis sensor was our first 2D optical flow sensor. It used the 40×47 Mantis vision chip, which included reconfigurable feature detectors and a multi-layer output. The processor was a TMS320 5000-series DSP running at 200 MHz. Four LEDs provided illumination in the dark.

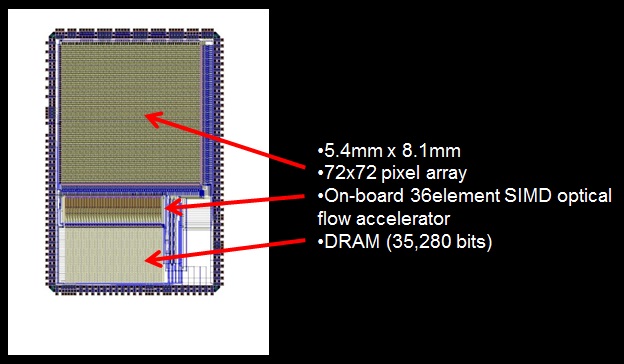

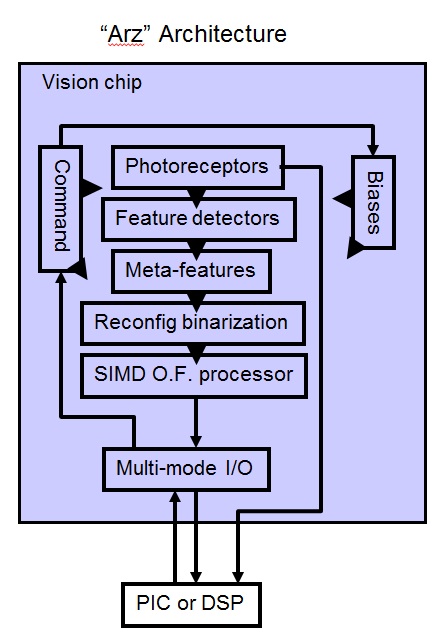

2007: Arz sensor

The Arz sensor incorporated the 72×72 Arz vision chip, our most complex vision chip ever. This vision chip was designed over a six-month period and included a 72×72 array of logarithmic pixels, X and Y direction edge detectors, reconfigurable feature detectors, and a 36-element SIMD optical flow accelerator. Together with a TMS320 5000-series DSP running at 200 MHz, this sensor could compute optical flow over the 72×72 array at over 300 Hz, and do so in a package weighing just a few grams. The Arz sensor was incorporated into our first MAOS hemisphere vision sensor.

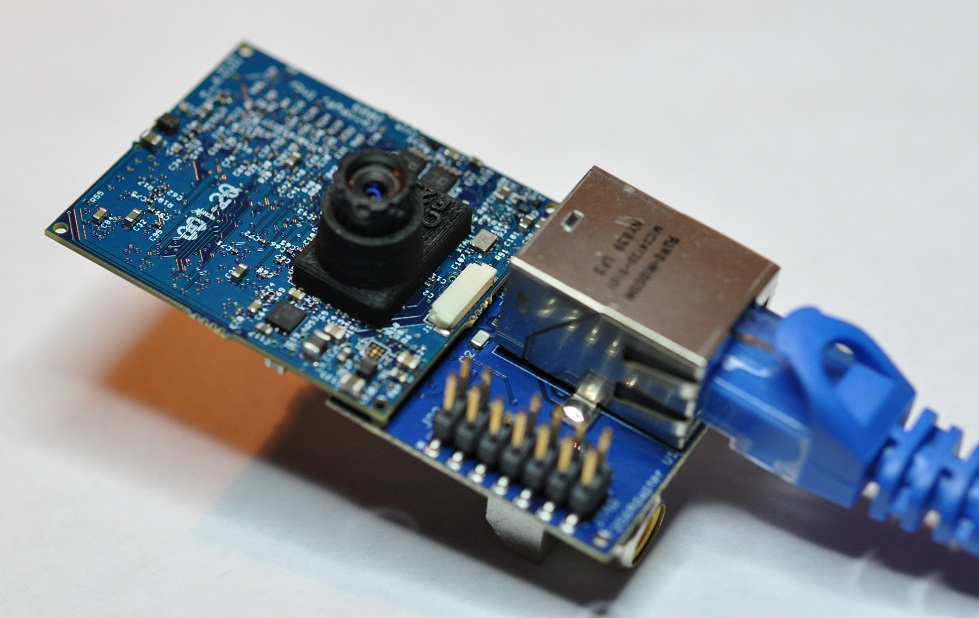
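To put the Arz figures in perspective, the back-of-the-envelope calculation below estimates how many DSP clock cycles are available per pixel at the quoted frame rate. It is only a sketch: the helper name is hypothetical, it uses 300 Hz as the frame rate (the text says "over 300 Hz"), and it ignores the work offloaded to the on-chip SIMD accelerator.

```python
def cycles_per_pixel(clock_hz, width, height, frame_rate_hz):
    """Clock cycles available per pixel per frame (illustrative budget only)."""
    pixels_per_second = width * height * frame_rate_hz
    return clock_hz / pixels_per_second


# Figures quoted for the Arz sensor: 200 MHz DSP, 72x72 array, 300 Hz
budget = cycles_per_pixel(200e6, 72, 72, 300)
print(f"{72 * 72 * 300:,} pixels/s, about {budget:.1f} DSP cycles per pixel")
```

With these numbers the array delivers 1,555,200 pixels per second, leaving roughly 129 DSP cycles per pixel, which suggests why on-chip edge detection and a flow accelerator were worth building into the vision chip.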

2009: Faraya256 sensor

The Faraya256 sensor was our most complex vision sensor. It incorporated a 256×256 flexible resolution Faraya vision chip, coupled with a Texas Instruments DaVinci DSP optimized for image processing. It could process optical flow at 64×64 resolution at 350Hz, or operate at different resolutions with different corresponding speeds. This vision sensor was incorporated in our MAOS2 hemisphere vision system.

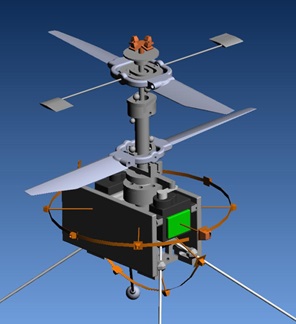

2009: Hover Ring

Centeye participated in DARPA’s Nano Air Vehicle program, with the goal of providing vision sensors for vision-based flight control and obstacle avoidance. The rigorous emphasis on small size forced us to take a more minimalist approach to vision sensing and produce extremely light vision sensors. The first of these was the hover ring, which allowed vision-based hover in place. The sensor comprised a 32-bit Atmel microcontroller running at 60 MHz and eight Faraya64 vision chips mounted on a flexible ring. The mass of the entire vision system, including processor board, chips, optics, and ring, was between 3 and 5 grams depending on the configuration. Below you see both a rendering we showed in our proposed work to DARPA, and the actual resulting ring. Yes, sometimes the final system does look like the proposed rendering.

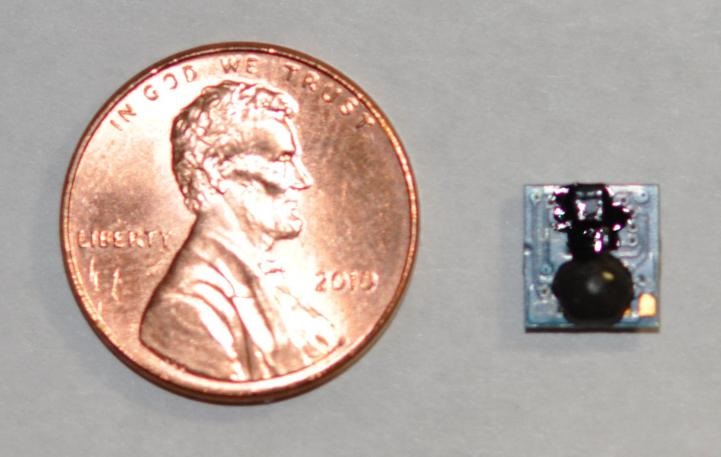

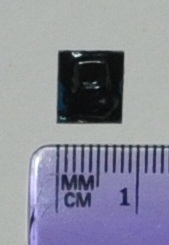

2010: TinyTam

Participation in the NSF-funded Harvard Robobees project forced us to pursue yet smaller vision sensors. The TinyTam sensor had a 16×16 vision chip and an Atmel AT-Tiny processor. The sensor weighed just 0.125 grams (1/8 of a gram) and measured optical flow at 20 Hz. We believe this is the lightest optical flow sensor ever fabricated. Unfortunately it was a bit ahead of its time…

2011: Sub-gram hover in place

We later demonstrated that we could achieve hover in place with a vision system weighing less than a gram. A single 208-milligram camera, incorporating a Stonyman vision chip and our proprietary flat wide field of view optics, coupled with an Atmel 32-bit processor, provided the vision sensing necessary to hover a helicopter with a 6-inch span.

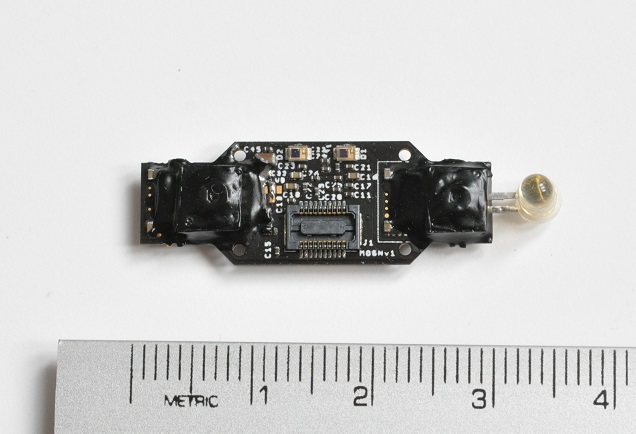

2016: 1 gram multi-mode stereo sensor

This is our latest sensor, currently being used in flight tests on our nano quadrotor platform:

- 2 x Centeye RockCreek™ vision chips, with two-mode pixels and analog contrast enhancement/edge detection circuitry

- Custom wide field of view (up to 150°) optics

- Optical flow

- Stereo vision

- Laser ranging

- LED illumination

- Mass: 1.0 grams

- Power: As little as 60 mA @ 4V

- Neural network-based attention modulation