Centeye’s Perception and Autonomy Suite is a collection of hardware and software components that gives micro- and nano-class drones advanced visual perception of their surroundings, and of their own position relative to those surroundings, in a way that supports autonomous flight in near-ground, indoor, and cluttered environments.

Hardware components:

- Centeye vision chips, the only vision/image sensor chips specifically designed and optimized for autonomy on small mobile platforms.

- Wide-field-of-view optics, designed by Centeye for use with our vision chips, supporting fields of view of up to 150 degrees.

- Sensor boards, including monocular and stereo configurations.

- Support electronics.

Software components:

- Firmware algorithms that operate the vision chips and acquire either raw imagery or partially processed imagery computed by on-chip analog circuitry.

- Image processing algorithms such as optical flow, stereo depth perception, proximity sensing, area ID feature extraction, etc.

- Global perception algorithms, for example to create depth- or threat-maps, to estimate drone state relative to the environment, or to construct a “fingerprint” vector of a room or area that could be used to support topological maps.

- Middle-loop reactive control algorithms for stability, obstacle avoidance, and navigation around or between obstacles or down tunnels.

- Limited outer-loop control, for example egress after ingress or flight between state-derived waypoints.
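As a rough illustration of the kind of image processing listed above, the Python sketch below estimates a single global 1D optical-flow value between two pixel rows using a standard gradient-based least-squares method, then converts that flow into a proximity estimate with the relation d = v·sin(θ)/ω, which holds for a sensor in pure translation past a stationary obstacle. This is not Centeye’s firmware or algorithm. The function names, the uniform angular pitch per pixel, and the no-rotation assumption are all illustrative assumptions.

```python
import math
from typing import Sequence


def optical_flow_1d(prev: Sequence[float], curr: Sequence[float]) -> float:
    """Estimate one global shift (pixels/frame) between two pixel rows.

    Gradient-based least squares under brightness constancy:
    curr[x] ~= prev[x - u]  =>  curr[x] - prev[x] ~= -u * dI/dx
    """
    if len(prev) != len(curr) or len(prev) < 3:
        raise ValueError("rows must have equal length of at least 3")
    num = 0.0
    den = 0.0
    for x in range(1, len(prev) - 1):
        # Spatial gradient: central difference averaged over both frames.
        ix = 0.25 * ((prev[x + 1] - prev[x - 1]) + (curr[x + 1] - curr[x - 1]))
        # Temporal difference at the same pixel.
        it = curr[x] - prev[x]
        num += ix * it
        den += ix * ix
    if den == 0.0:
        return 0.0  # textureless row: flow is unobservable, report zero
    return -num / den


def proximity_from_flow(flow_px: float, rad_per_px: float, frame_rate_hz: float,
                        speed_m_s: float, bearing_rad: float) -> float:
    """Distance to an obstacle from translational optic flow (assumes no rotation).

    bearing_rad is the angle between the direction of travel and the viewing direction.
    """
    omega = abs(flow_px) * rad_per_px * frame_rate_hz  # angular flow in rad/s
    if omega == 0.0:
        return math.inf  # no flow: obstacle effectively at infinity
    return speed_m_s * abs(math.sin(bearing_rad)) / omega
```

For example, a row that shifts by half a pixel between frames yields a flow close to 0.5, and a side-looking sensor (θ = 90°) moving at 1 m/s that sees 2 rad/s of flow implies an obstacle about 0.5 m away. In practice rotation must be removed (for example, using gyro data) before applying the proximity relation.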

Centeye’s perception and autonomy suite is generally used for “middle loop” control, sitting between “outer loops”, such as GPS-based or similar waypoint navigation or even human operator input, and “inner loops”, such as pose control. In our own work, we generally take “control stick” information from a human operator as input, reinterpret these inputs as requested actions, and output synthetic control signals to a flight control unit (FCU).
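The middle-loop idea described above can be sketched as a function that takes operator stick values plus perception outputs and returns synthetic stick values for the FCU. The sketch below is hypothetical: the `StickInput` and `Perception` types, the thresholds, the sign convention (positive roll moves the drone right, positive pitch moves it forward), and the blending rules are assumptions chosen for illustration, not Centeye’s control law.

```python
from dataclasses import dataclass


@dataclass
class StickInput:
    """Hypothetical normalized stick values in [-1, 1]."""
    roll: float
    pitch: float
    yaw: float
    throttle: float


@dataclass
class Perception:
    """Hypothetical nearest-obstacle distances in metres."""
    front: float
    left: float
    right: float


def clamp(value: float, lo: float = -1.0, hi: float = 1.0) -> float:
    return max(lo, min(hi, value))


def middle_loop(stick: StickInput, percep: Perception, stop_dist: float = 0.5,
                slow_dist: float = 2.0, k_lateral: float = 0.5) -> StickInput:
    """Reinterpret operator sticks as requested actions and emit synthetic sticks."""
    # Forward request: scale it down linearly as the front obstacle gets closer.
    if stick.pitch > 0.0:
        if percep.front <= stop_dist:
            scale = 0.0
        elif percep.front >= slow_dist:
            scale = 1.0
        else:
            scale = (percep.front - stop_dist) / (slow_dist - stop_dist)
        pitch = stick.pitch * scale
    else:
        pitch = stick.pitch  # backing away is passed through unchanged

    # Lateral centering: push away from the nearer side obstacle.
    inv_left = 1.0 / max(percep.left, 1e-3)
    inv_right = 1.0 / max(percep.right, 1e-3)
    correction = k_lateral * (inv_left - inv_right) / (inv_left + inv_right)
    roll = clamp(stick.roll + correction)

    # Yaw and throttle are forwarded as-is in this sketch.
    return StickInput(roll=roll, pitch=clamp(pitch), yaw=stick.yaw,
                      throttle=stick.throttle)
```

With equal side distances the lateral correction is zero, so the operator’s roll passes through untouched; a full-forward request 1.25 m from a wall is halved, and one inside `stop_dist` is cancelled. Keeping the output in the same stick format means the FCU needs no changes, which matches the interface role described above.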

This technology is structured as a suite of modular components rather than as a single “autonomy black box”. We are often asked why we do not simply develop an “autonomy black box” that can be attached to any drone to make it autonomous. The answer is simple: autonomy requirements vary so widely that a “one size fits all” configuration is not possible. Instead, we find it best to work with clients to provide the right collection of the above components for their application. Accordingly, an integral part of our product offering is advice and support in choosing the right combination for each application.

Usage Architectures

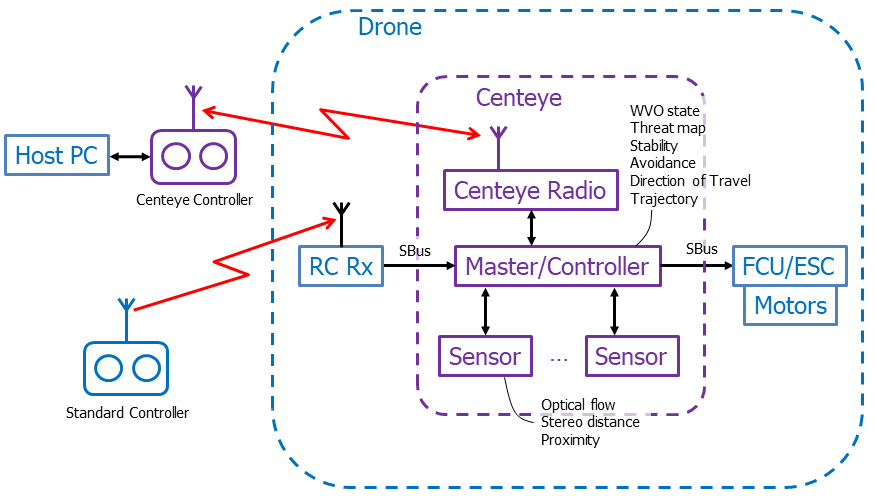

The diagram below shows how we prefer to use our technology for internal work: Centeye’s technology suite serves as an interface between operator-generated control input and the drone’s flight control electronics. A similar configuration, which we use with the Crazyflie 2.0 drone platform, uses only the Centeye controller, with the Master/Controller communicating with the Crazyflie over I2C instead of SBus.
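When the synthetic control signals reach the FCU over SBus, they have to be packed into SBus frames. The Python sketch below assumes the commonly documented classic SBus layout: a 25-byte frame with a 0x0F start byte, 16 proportional channels of 11 bits each packed least-significant-bit first into 22 bytes, a flags byte (bit 2 = frame lost, bit 3 = failsafe), and a 0x00 end byte. The function names are hypothetical, and this is not Centeye’s implementation.

```python
from typing import List, Sequence

SBUS_HEADER = 0x0F
SBUS_FOOTER = 0x00
NUM_CHANNELS = 16
BITS_PER_CHANNEL = 11
PAYLOAD_LEN = NUM_CHANNELS * BITS_PER_CHANNEL // 8  # 22 bytes


def encode_sbus(channels: Sequence[int], failsafe: bool = False,
                frame_lost: bool = False) -> bytes:
    """Pack 16 channel values (0..2047) into a 25-byte SBus frame."""
    if len(channels) != NUM_CHANNELS:
        raise ValueError(f"expected {NUM_CHANNELS} channels")
    payload = bytearray(PAYLOAD_LEN)
    bit = 0
    for value in channels:
        if not 0 <= value <= 0x7FF:
            raise ValueError(f"channel value out of 11-bit range: {value}")
        # Channels are packed back to back, least significant bit first.
        for i in range(BITS_PER_CHANNEL):
            if value & (1 << i):
                payload[bit // 8] |= 1 << (bit % 8)
            bit += 1
    # Flags byte: bit 2 = frame lost, bit 3 = failsafe; digital channels 17/18 left at 0.
    flags = (0x04 if frame_lost else 0) | (0x08 if failsafe else 0)
    return bytes([SBUS_HEADER]) + bytes(payload) + bytes([flags, SBUS_FOOTER])


def decode_sbus(frame: bytes) -> List[int]:
    """Unpack the 16 proportional channels from a 25-byte SBus frame."""
    if len(frame) != 25 or frame[0] != SBUS_HEADER or frame[24] != SBUS_FOOTER:
        raise ValueError("not a valid classic SBus frame")
    payload = frame[1:1 + PAYLOAD_LEN]
    channels = []
    for ch in range(NUM_CHANNELS):
        value = 0
        for i in range(BITS_PER_CHANNEL):
            bit = ch * BITS_PER_CHANNEL + i
            if payload[bit // 8] & (1 << (bit % 8)):
                value |= 1 << i
        channels.append(value)
    return channels
```

The decoder is included mainly to check that encoding round-trips. On real hardware these bytes are usually sent on a UART at 100,000 baud with 8 data bits, even parity, and 2 stop bits over an inverted signal line; the FCU’s own documentation should be checked for its expected channel range and frame rate.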

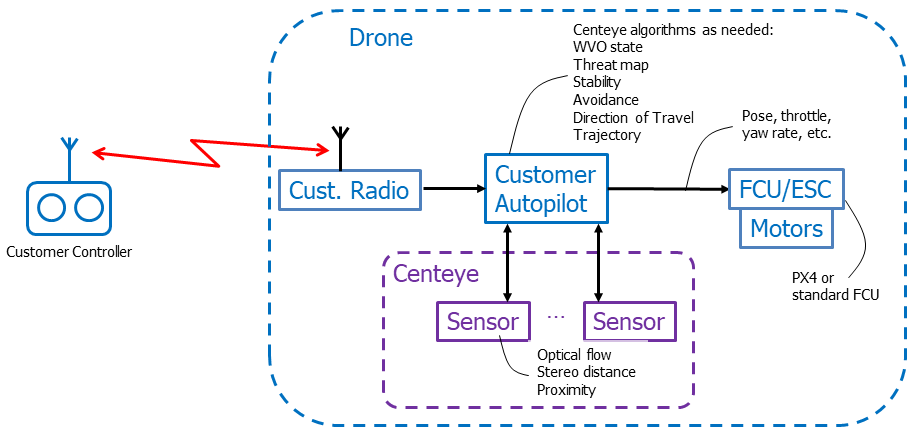

Most clients will want to use their own autopilot board and/or central processor board, perhaps to incorporate other mission-specific sensors. In this case, the architecture below may be more appropriate.