Drone obstacle avoidance in 2003: 264 pixels and 8-bit processors

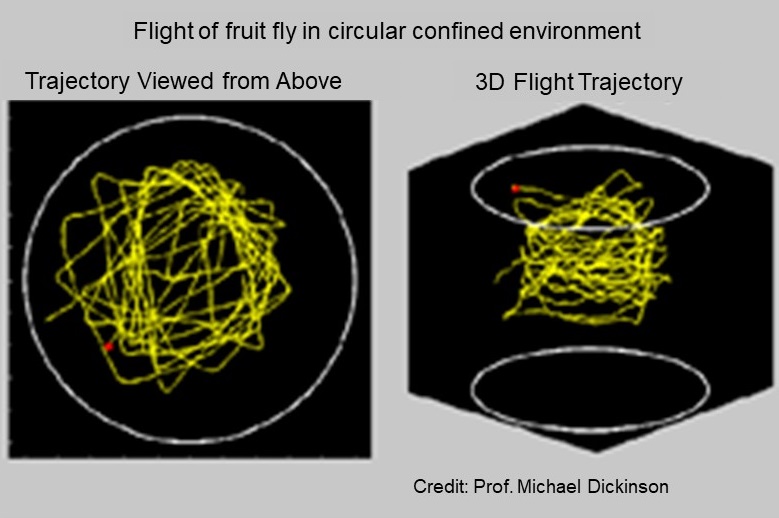

Figure 1: (left) Foam drone with optical flow sensor mounted under a wing, Summer 2001. (right) Foam drone with optical flow sensors for attempted obstacle avoidance, Summer 2002.

Today it is universally acknowledged that drones operating close to the ground need some sort of obstacle avoidance. What follows is the story of our own first attempt at putting obstacle avoidance on a drone, from 2001 through 2003, using neuromorphic artificial insect vision hardware. I enjoy telling this story because the lessons learned run contrary to common practice even today, yet, upon reflection, they should be common sense. The most important lesson is still relevant: the best results come from a systemic approach in which the drone and its obstacle avoidance hardware are holistically co-designed. This contrasts with the popular approach of treating obstacle avoidance as a modular component that can simply be added to a drone.

Initial Successes with Altitude Hold

As discussed in my last post, by taking inspiration from the vision systems of insects, we implemented interesting vision-based behaviors on drones using only several hundred pixels. Nineteen years ago, in 2001, we built an optical flow sensor using a 24-pixel neuromorphic vision chip and a modest 8-bit microcontroller. We mounted the sensor under one wing of a fixed-wing model airplane, looking down at the ground (Figure 1, left, above). The sensor was programmed to measure front-to-back optical flow and, from that, estimate the airplane’s height above ground. We implemented a simple proportional control rule that increased the aircraft’s throttle or elevator pitch as optical flow increased. The system worked quite well: once the aircraft was set to the target height, it would hold that height for as long as the battery lasted (and wind conditions permitted). The sensor operated only the throttle or elevator; the human operator still steered the aircraft with the rudder via a standard RC control stick. We verified that the sensor could ascend and descend gentle slopes. We even later achieved similar results over unbroken snow on a cloudy day!
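A minimal sketch of the altitude-hold idea may help, under assumptions that are mine rather than details of the original firmware: level flight over flat ground, a downward-looking sensor, and a roughly constant airspeed v. Under those assumptions the ventral optical flow is ω = v / h, so more flow means a lower aircraft, and turning flow into a height estimate requires knowing v. The function names, gain, setpoint, and normalized [0, 1] actuator command are all hypothetical.

```python
def ground_flow(speed_mps: float, height_m: float) -> float:
    """Ventral optical flow (rad/s) looking straight down.

    Assumes level flight over flat ground, where flow = speed / height.
    """
    if height_m <= 0:
        raise ValueError("height must be positive")
    return speed_mps / height_m


def estimate_height(speed_mps: float, flow_rad_s: float) -> float:
    """Invert the flow relation; only valid if airspeed is known."""
    return speed_mps / flow_rad_s


def altitude_hold_command(flow_rad_s: float, flow_setpoint: float,
                          gain: float = 0.5, neutral: float = 0.5) -> float:
    """Hypothetical proportional rule: more flow (too low) -> more throttle
    or up-elevator. Returns a normalized command clipped to [0, 1]."""
    error = flow_rad_s - flow_setpoint
    command = neutral + gain * error
    return max(0.0, min(1.0, command))


if __name__ == "__main__":
    speed = 10.0                          # assumed constant airspeed, m/s
    setpoint = ground_flow(speed, 5.0)    # target flow for a 5 m height
    for h in (2.5, 5.0, 10.0):
        flow = ground_flow(speed, h)
        cmd = altitude_hold_command(flow, setpoint)
        print(f"h={h:4.1f} m  flow={flow:.2f} rad/s  cmd={cmd:.2f}")
```

Because a rule like this acts on flow rather than on height, it really holds the ratio v/h constant; it holds a fixed height only insofar as airspeed stays roughly constant.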

You can see one of the videos, which I shared previously, here: https://youtu.be/EkFh_2UX-Jw You’ll hear a tone in the video. Our telemetry was laughably simple: the sensor transmitted this tone by on-off keying an RF module at a rate that varied with optical flow. The camcorder operator carried a scanner (set to AM mode) that received the signal and played the tone aloud, so it was recorded along with the video of the flight.
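The tone telemetry can be sketched as two small functions. Every number here is an assumption, not a detail of the original hardware: optical flow is mapped linearly onto an audible keying rate, and the transmitter is switched on and off at that rate. An AM receiver demodulating an on-off keyed carrier outputs a square wave at the keying rate, which is what you hear as the tone.

```python
def keying_rate_hz(flow: float, flow_max: float = 5.0,
                   rate_min: float = 200.0, rate_max: float = 2000.0) -> float:
    """Map an optical flow reading (rad/s) linearly onto a keying rate (Hz),
    clamped to [rate_min, rate_max]. All defaults are hypothetical."""
    fraction = max(0.0, min(1.0, flow / flow_max))
    return rate_min + fraction * (rate_max - rate_min)


def ook_pattern(rate_hz: float, duration_s: float,
                sample_rate: int = 8000) -> list[int]:
    """Transmitter on/off states (1 = carrier on) at a 50% duty cycle.

    After AM demodulation, this on/off pattern is heard as a tone at rate_hz.
    """
    n_samples = int(duration_s * sample_rate)
    period = sample_rate / rate_hz  # samples per on/off cycle
    return [1 if (i % period) < period / 2 else 0 for i in range(n_samples)]


if __name__ == "__main__":
    for flow in (0.0, 2.5, 5.0):
        print(f"flow={flow} rad/s -> tone at {keying_rate_hz(flow):.0f} Hz")
```

Higher flow produces a higher-pitched tone, so a listener can follow the sensor’s output by ear.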

I look back fondly at the scrappiness of our approach! I personally designed the vision chip using free and open source tools (Magic and Spice) on a $1000 laptop running Linux. I then had the chip fabricated through the MOSIS service for $1200. Our most expensive piece of lab equipment was a PIC microcontroller emulator that I think cost me about $2500 at the time. I find it interesting that a “fabless semiconductor” startup today “needs” tens of millions of dollars to start, but that is a topic for another post. Going from the design of the vision chip (December 2000) to the first successful flight (July 2001) took eight months, much of it spent learning how to build and control the model airplane!

Failed Attempts at Obstacle Avoidance

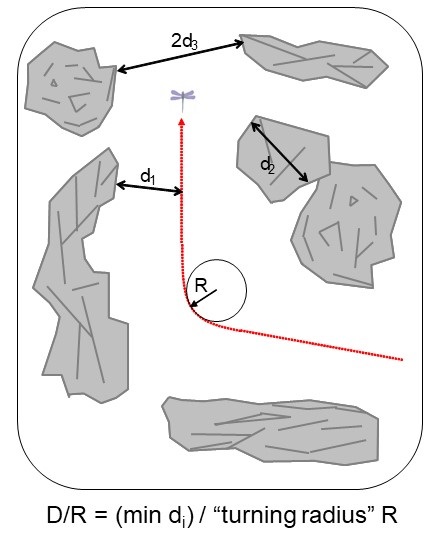

After getting “altitude hold” under control, I decided to tackle obstacle avoidance next, again using optical flow. I drew inspiration from the work of noted biologist (and MacArthur grant winner) Michael Dickinson, whose laboratory had recently recorded the 3D flight paths of fruit flies in a chamber and analyzed how the flight paths turned in response to an obstacle, in this case the wall of the chamber (Figure 2). Not surprisingly, the flies tended to travel in straight lines until they were sufficiently close to the wall, at which point they executed a sharp turn away. An analysis of the flight paths and the environment showed that the turn reliably happened whenever the optical flow due to the approaching wall crossed a threshold. The fly would then resume forward flight in the new direction until it reached another part of the wall.

Figure 2: 3D flight paths made by fruit flies in a confined arena. Apologies for the poor image quality.

Back in 2001, I thought this behavior could be implemented with a few optical flow sensors. My first attempt used just two optical flow sensors, mounted on the aircraft to view diagonally forward-left and forward-right. The controller was programmed to detect when the optical flow on one side was high and then deflect the aircraft’s rudder to steer away for a fixed duration. Here is a video clip of an early test: https://youtu.be/Ao3rhiQR0BM In this case the tone indicated rudder actuation: a middle pitch indicated neutral, i.e., no actuation, while a high or low tone indicated that the rudder was trying to turn the aircraft one way or the other. It was comical: the optical flow sensor picked up the tree and initiated a turn, but not soon enough to avoid a collision!
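The two-sensor controller described above can be sketched as a tiny state machine. This is a hypothetical reconstruction, not the original firmware: the class name, threshold, and cycle count are illustrative, and the rudder is encoded as -1 (turn left), 0 (neutral), or +1 (turn right).

```python
from dataclasses import dataclass, field


@dataclass
class TwoSensorAvoider:
    """Hypothetical sketch: two forward-diagonal optical flow sensors drive
    a rudder that is held over for a fixed number of control cycles."""
    threshold: float   # flow (rad/s) that counts as "high"
    turn_cycles: int   # how long to hold the rudder once a turn starts
    _cycles_left: int = field(default=0, init=False)
    _rudder: int = field(default=0, init=False)  # -1 left, 0 neutral, +1 right

    def step(self, flow_left: float, flow_right: float) -> int:
        """Return the rudder command for one control cycle."""
        if self._cycles_left == 0:
            # No turn in progress: center the rudder, then check for a trigger.
            self._rudder = 0
            if max(flow_left, flow_right) > self.threshold:
                # Steer away from the side that sees more flow.
                self._rudder = 1 if flow_left > flow_right else -1
                self._cycles_left = self.turn_cycles
        if self._cycles_left > 0:
            self._cycles_left -= 1
        return self._rudder


if __name__ == "__main__":
    avoider = TwoSensorAvoider(threshold=1.0, turn_cycles=3)
    readings = [(0.2, 0.3), (1.5, 0.4), (0.1, 0.1),
                (0.1, 0.1), (0.1, 0.1), (0.3, 2.0)]
    print([avoider.step(left, right) for left, right in readings])
```

Once a turn starts, the sketch ignores new readings until the fixed duration expires, mirroring the fixed-duration turn in the text. Note that no choice of threshold in such a controller changes how tightly the aircraft can actually turn.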

We spent almost two years trying to improve that demonstration. I designed improved neuromorphic vision chips (with 88 pixels), and we made lighter yet more powerful versions of the optical flow sensors. We even experimented with different optical flow algorithms by varying the microcontroller firmware while keeping the same vision chip as the front end. Figure 1 (right, above) shows one iteration. It had four sensors: one pointing down to control height and three pointing forward to detect obstacles. We added a proper telemetry downlink that let us record and display data such as optical flow measurements, aircraft yaw rate, and control responses. Still, obstacle avoidance did not improve. We did, however, produce a graphical display showing three beautifully hilarious sequential events: first the increase in optical flow from the looming tree, then the rudder’s control response, and finally a sharp spike in yaw rate as the aircraft slammed into the tree! The only thing that “improved” was the monetary value of the electronics we had to fish out of a tree after each crash…

Eureka!

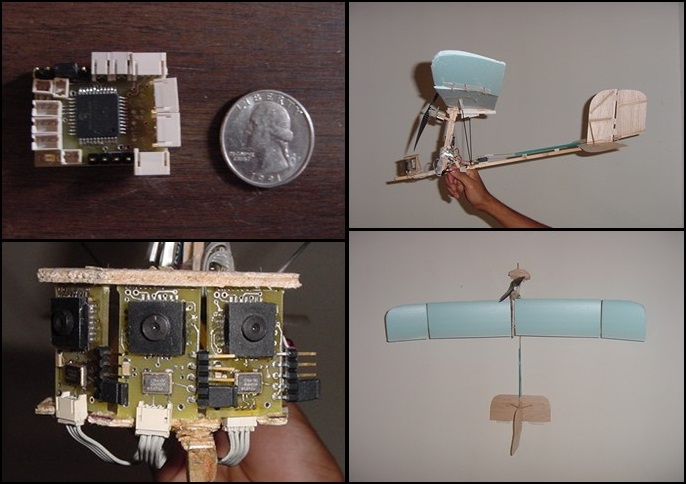

It is human nature, when trying to solve a difficult problem, to keep going down the same general path, banging your head against a dead end until you accept that it is indeed a dead end and you need a different approach. In my case, the epiphany came when I took a second look at the flight paths recorded by Prof. Dickinson and saw something I had missed before. Sure, the flies flew in straight lines and then made sharp turns upon detecting an obstacle. What I had missed was the sharpness of the turn: the arc flown during the turn had a radius of a few centimeters. More significantly, the turn was made about ten centimeters from the wall. I imagined a parameter “R”, the “turning radius” of the insect (Figure 3). A turning radius is not a native measure of maneuverability for a flying insect or drone in the way it is for a ground vehicle, but it serves as a first-order approximation. I then imagined a parameter “D”, which might be the size of an obstacle to avoid or the distance from an obstacle at which the drone or insect turns to avoid it.

Figure 3: D/R ratio

I then realized that what matters is the ratio of the two: D/R. This ratio is essentially distance normalized by maneuverability. In the fruit fly case, D/R was perhaps 10 cm divided by a few centimeters, or around two to four. Now consider the aircraft we had been using: a foam “park flyer” designed to be easy to fly and control, using only a rudder for steering, and therefore, by design, of limited maneuverability. Furthermore, we had loaded it with sensors, telemetry, and other support electronics, which further reduced its ability to turn. Its R value was perhaps 20 meters, if not more! To achieve a similar D/R of two to four, it would need to start avoiding the tree at a distance of 40 to 80 meters or more. At that distance, the small tree we were trying to avoid was simply too small in the visual field for our sensors to detect. With that turning radius, our implementation was better suited to avoiding a large building, mountain, or cliff than a single tree.
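The D/R arithmetic above is easy to reproduce. In this sketch, “a few centimeters” for the fly’s turning radius is taken as 2.5 to 5 cm, and the 5 m tree width is a hypothetical value added only to show how small the obstacle becomes at the required trigger distance; neither number comes from measurements.

```python
import math


def d_over_r(trigger_distance_m: float, turn_radius_m: float) -> float:
    """Dimensionless D/R: trigger distance normalized by turning radius."""
    return trigger_distance_m / turn_radius_m


def required_trigger_distance(turn_radius_m: float, target_ratio: float) -> float:
    """Distance D at which a vehicle with turning radius R must start turning."""
    return target_ratio * turn_radius_m


def angular_size_deg(width_m: float, distance_m: float) -> float:
    """Full angle (degrees) subtended by an object of the given width."""
    return math.degrees(2 * math.atan(width_m / (2 * distance_m)))


if __name__ == "__main__":
    # Fruit fly: D ~ 10 cm, R ~ "a few centimeters" (2.5-5 cm assumed here).
    for r in (0.025, 0.05):
        print(f"fly R={r * 100:.1f} cm  D/R={d_over_r(0.10, r):.1f}")
    # Park flyer: R ~ 20 m, so matching D/R of 2-4 needs D of 40-80 m.
    tree_width = 5.0  # hypothetical tree width, m
    for ratio in (2, 4):
        d = required_trigger_distance(20.0, ratio)
        print(f"D/R={ratio}: D={d:.0f} m, tree spans "
              f"{angular_size_deg(tree_width, d):.1f} deg")
```

Under these assumptions the tree spans only a few degrees at 40 to 80 m, which illustrates why shrinking R, rather than trying to see farther, was the more promising lever.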

I was eager to achieve a successful demonstration of obstacle avoidance, and our sponsor at the time (DARPA) made it clear they required it. The insight of D/R showed me what we needed to do: boost the D/R of our system, and the most direct way was to decrease R, i.e., make the aircraft much more maneuverable. First, we found a way to shave a few grams off the sensors while modestly improving their performance. We then simplified the control and support electronics: we removed the telemetry downlink to the human operator, built a simpler controller board, and found lighter cabling. Finally, we scratch-built a new model aircraft from balsa wood, pine, and foam. The resulting aircraft (Figure 4) was smaller, with half the wingspan, and at least an order of magnitude lighter. It was ugly and inefficient, and it made any aeronautical engineer who looked at it cringe. But it had what I, a chip designer by training perhaps armed with a fundamental grasp of physics, realized would make the aircraft turn: a giant rudder!

Figure 4: Aircraft with improved D/R used to demonstrate obstacle avoidance

After honing the aircraft design, it took just a few weeks of tuning to get it to avoid a tree line. The result is this video that I shared previously: https://youtu.be/qxrM8KQlv-0

Lessons Learned

So, was it possible almost two decades ago to give a drone obstacle avoidance using the technology available then? Absolutely. In fact, we probably could have done this in the 1990s or even the 1980s if we had known then what we know now. But it would not have been accomplished merely by adding obstacle avoidance to the drone; it would also have required designing the drone to support obstacle avoidance.

There are lessons from that demo that I still carry with me. First and most important, it is necessary to take a systemic approach when implementing obstacle avoidance on a drone. A systemic approach yields insights that would be missed by looking only at individual components. In my case, 17 years ago, a systemic approach gave me the insight that successful obstacle avoidance required increasing D/R, and that the most direct route at the time was to decrease R rather than increase D; in other words, to make the platform more maneuverable rather than redesign the sensor.

A second lesson is that very often “less is more”. There is a benefit to simplicity and to eliminating excess that is often lost in practice. I am reminded of a saying attributed to the great automotive engineer Colin Chapman of Lotus: more power makes a race car faster on straight roads, while more lightness makes a race car faster everywhere! I think this lesson is easy to understand but tough to put into practice, because it runs contrary to ingrained habits: pedagogy and society both reward people for working excessively hard and going through the motions to implement a complex solution, rather than taking the time to identify and implement one that is simpler and more elegant.

In another post, I will discuss observations and lessons learned from more recent work on giving small drones obstacle avoidance, including whether a modular approach is even feasible today. For now, I am curious to learn whether others have had similar experiences. Thank you for reading!